Multi-camera Torso Pose Estimation using Graph Neural Networks

Contributors: Daniel Rodriguez-Criado, Pilar Bachiller-Burgos, Pablo Bustos, George Vogiatzis and Luis J. Manso

This work is a first step towards human pose estimation using Graph Neural Networks (GNNs). Here, the model estimates only the position and orientation of a person's torso. (For full 3D pose estimation, see the next project.)

We therefore define the pose as the position of the person's torso on the floor plane together with its orientation about the vertical axis.

Fig. 1 Torso detection in a real environment (first row) and in CoppeliaSim (second row).
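As a minimal sketch of this pose representation, the snippet below stores the floor-plane position and the rotation about the vertical axis in a small dataclass. The names `TorsoPose`, `x`, `y` and `theta`, the metre units, and the (sin θ, cos θ) angle encoding are assumptions for illustration, not details confirmed by the project. Because angles wrap around at ±π, the orientation error is measured on the circle rather than as a plain difference.

```python
import math
from dataclasses import dataclass


@dataclass
class TorsoPose:
    """Hypothetical container for a torso pose: floor-plane position plus yaw."""
    x: float      # position on the floor plane (metres, assumed)
    y: float      # position on the floor plane (metres, assumed)
    theta: float  # rotation about the vertical axis (radians)

    def to_vector(self) -> list[float]:
        # Encode the angle as (sin, cos) so that -pi and pi map to the same target.
        return [self.x, self.y, math.sin(self.theta), math.cos(self.theta)]

    @staticmethod
    def from_vector(v: list[float]) -> "TorsoPose":
        # atan2 recovers the angle in (-pi, pi] from the (sin, cos) pair.
        return TorsoPose(v[0], v[1], math.atan2(v[2], v[3]))


def angular_error(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in [0, pi]."""
    d = (a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def position_error(p: TorsoPose, q: TorsoPose) -> float:
    """Euclidean distance between two torso positions on the floor plane."""
    return math.hypot(p.x - q.x, p.y - q.y)
```

For example, headings of 179° and -179° differ by only 2° under `angular_error`, whereas a naive subtraction would report 358°.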

This project combines real and synthetic training data, which makes it possible to build a large dataset in a relatively short time and saves considerable resources. The hypothesis is that the additional data generated in simulation improves results compared with training on real data alone. The first row of Fig. 1 shows images of the real environment, while the second row shows three views of a CoppeliaSim representation of the environment in which the system has been tested. Both setups share the same camera configuration and identical calibration, with an AprilTag placed in the same relative position serving as the origin of coordinates.
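Using a shared AprilTag as the origin lets every camera express its detections in one common world frame. A minimal sketch, assuming each camera's calibration yields the tag's pose in that camera's frame as a rotation `R` and translation `t` (so that a point maps as X_cam = R X_tag + t): inverting this transform converts camera-frame points into the tag (world) frame. The function names and this convention are assumptions for illustration, not the project's code.

```python
import numpy as np


def camera_to_world(points_cam: np.ndarray, R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Map Nx3 camera-frame points into the AprilTag (world) frame.

    Assumes the calibration gives the tag pose in the camera frame, i.e.
    X_cam = R @ X_tag + t, so the inverse is X_tag = R.T @ (X_cam - t).
    """
    points_cam = np.atleast_2d(points_cam)
    # Row-vector form of R.T @ (X - t) applied to every point at once.
    return (points_cam - t) @ R


def rotation_z(angle: float) -> np.ndarray:
    """Rotation matrix about the z axis (used here only to build a test pose)."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
```

Since all cameras refer to the same tag, points from different views land in one frame and can be compared directly; for a floor-plane pose, only the two horizontal coordinates of the result would be kept.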

The model presented on this page uses graph neural networks to fuse the information acquired from multiple cameras, achieving a mean absolute error below

How does it work?

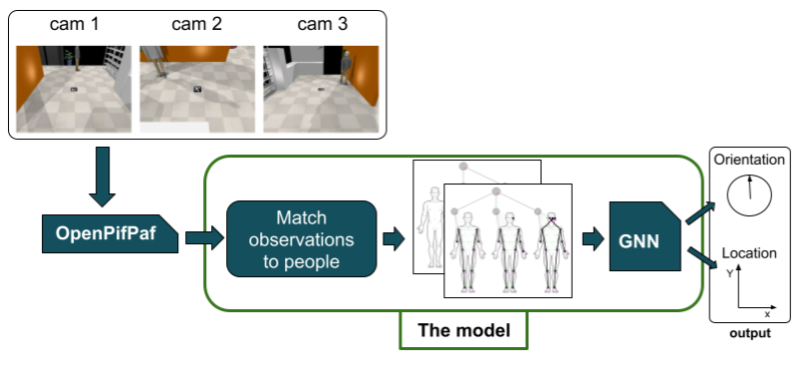

The whole process is divided into three main steps. First, images are acquired and processed with OpenPifPaf, although any other 2D detector could be used for this step. The output of this phase, a set of skeletons detected from different cameras, is passed to the next phase, where skeletons corresponding to the same person are matched and grouped. These groups are then fed to a GNN, which produces the final output. A GNN is used here because it tolerates body parts that the 2D detector misses: graph networks do not require a fixed input dimension, so missing keypoints simply make the input graph smaller. Moreover, structuring the graph according to the body's anatomy introduces an inductive bias into the network. This bias arguably helps the network interpret the input data, potentially leading to better results.

Fig. 2 Pipeline of the steps of the system for torso pose estimation.
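The matching step can be pictured as clustering detections that plausibly show the same person. The sketch below is a hypothetical greedy grouping, not the authors' matching procedure: it assumes each 2D skeleton already comes with a rough floor-plane position in the shared world frame (the field `floor_xy`), and it adds a detection to the nearest group whose centroid lies within `max_dist` metres, provided that group holds no other skeleton from the same camera. The `Detection` type, its fields and the threshold value are illustrative assumptions.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Detection:
    """Hypothetical 2D skeleton detection with a rough floor-plane estimate."""
    camera_id: int
    floor_xy: tuple[float, float]  # assumed rough world-frame position (metres)
    keypoints: dict = field(default_factory=dict)  # name -> (u, v, confidence)


def _centroid(group: list[Detection]) -> tuple[float, float]:
    xs = [d.floor_xy[0] for d in group]
    ys = [d.floor_xy[1] for d in group]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def group_detections(detections: list[Detection],
                     max_dist: float = 0.5) -> list[list[Detection]]:
    """Greedily group detections that likely show the same person.

    A group never contains two detections from the same camera.
    """
    groups: list[list[Detection]] = []
    for det in detections:
        best, best_dist = None, max_dist
        for group in groups:
            if any(d.camera_id == det.camera_id for d in group):
                continue  # at most one skeleton per camera per person
            cx, cy = _centroid(group)
            dist = math.hypot(det.floor_xy[0] - cx, det.floor_xy[1] - cy)
            if dist <= best_dist:
                best, best_dist = group, dist
        if best is None:
            groups.append([det])  # no compatible group: start a new person
        else:
            best.append(det)
    return groups
```

Because the grouping is greedy, its result can depend on the order of the detections; an optimal assignment, for instance with SciPy's `linear_sum_assignment`, is one way to avoid that.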
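To see why a GNN copes with missing body parts, the sketch below builds a graph from one group of skeletons and runs a single message-passing layer followed by mean pooling. It is a minimal NumPy illustration, not the published architecture: the keypoint subset, the anatomical edge list, the node features (u, v, confidence), the cross-camera edges linking the same keypoint in different views, and the random, untrained weights are all hypothetical. Keypoints that the 2D detector missed produce no node, and the pooled output has the same size whatever the number of nodes.

```python
import numpy as np

# Hypothetical torso-related keypoint subset and anatomical edges between them.
KEYPOINTS = ["nose", "l_shoulder", "r_shoulder", "l_hip", "r_hip"]
BODY_EDGES = [("nose", "l_shoulder"), ("nose", "r_shoulder"),
              ("l_shoulder", "r_shoulder"), ("l_shoulder", "l_hip"),
              ("r_shoulder", "r_hip"), ("l_hip", "r_hip")]


def build_graph(views: dict[int, dict[str, tuple[float, float, float]]]):
    """Build node features and an adjacency matrix from per-camera skeletons.

    `views` maps camera id -> {keypoint name: (u, v, confidence)}.
    Missing keypoints are simply absent, so they create no node.
    """
    index, feats = {}, []
    for cam, kps in views.items():
        for name in KEYPOINTS:
            if name in kps:
                index[(cam, name)] = len(feats)
                feats.append(kps[name])
    n = len(feats)
    adj = np.eye(n)  # self-loops keep each node's own features

    def connect(a, b):
        # Add an undirected edge only if both endpoints were detected.
        if a in index and b in index:
            adj[index[a], index[b]] = adj[index[b], index[a]] = 1.0

    cams = list(views)
    for cam in cams:
        for a, b in BODY_EDGES:  # anatomical edges within one view
            connect((cam, a), (cam, b))
    for i, c1 in enumerate(cams):
        for c2 in cams[i + 1:]:
            for name in KEYPOINTS:  # same keypoint seen from two cameras
                connect((c1, name), (c2, name))
    return np.asarray(feats, dtype=float).reshape(n, 3), adj


def gnn_forward(x, adj, w_msg, w_out):
    """One mean-aggregation message-passing layer plus mean pooling."""
    deg = adj.sum(axis=1, keepdims=True)
    h = np.maximum(0.0, ((adj @ x) / deg) @ w_msg)  # average neighbours, then ReLU
    return h.mean(axis=0) @ w_out  # graph-level vector, size independent of n
```

With random weights the output carries no meaning; the point is that a ten-node graph from two complete skeletons and a five-node graph from two partial ones both yield a vector of the same length (four here, for example a position plus an angle encoding).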

How to use it?

To test the model, follow these steps:

- Clone the repository:

  ```bash
  git clone https://github.com/vangiel/WheresTheFellow.git
  cd WheresTheFellow
  ```

- Install the required packages.

- Run the test script:

  ```bash
  python3 test.py
  ```

Citation

To cite this work, use the following BibTeX entry:

```bibtex
@inproceedings{rodriguez2020multi,
  title={Multi-camera torso pose estimation using graph neural networks},
  author={Rodriguez-Criado, Daniel and Bachiller, Pilar and Bustos, Pablo and Vogiatzis, George and Manso, Luis J},
  booktitle={2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN)},
  pages={827--832},
  year={2020},
  organization={IEEE}
}
```